The length in bytes of the cell that an asymmetric key or certificate can encode is severely restricted.

table 1: MAXIMUM “clear text” data cell size that can be encrypted

Encryption Method Algorithm max bytes length Asymmetric Key RSA_512 53 RSA_1024 117 RSA 2048 245 Certificate n/a 256 Symmetric Key all 8000

When only 53 bytes – including any salt- can be digested by an encryption method, the encryption method is of little practical use for cell encryption. Even the limited capabilities of longer asymmetric keys and certificates are easily exceeded by many requirements. Symmetric ciphers are able to accept 8000 bytes. When even larger documents must be encrypted there are no T-SQL options. Nonetheless, it is still of interest in algorithm selection to understand the relative costs differences – in terms of execution overhead and data explosion – among the handful of hashing and symmetric key algorithms available to SQL Server. This can be done by repeating an encryption and decryption operation using a set of static data. Using CRYPT_GEN_RANDOM to generate the static data and collecting comparable data points to also get an understanding of the cost of that method is also possible in this scenario.

Including PWDCOMPARE in the same test lends some motivational support for getting rid of that contraption if you happen to be using it. It disappears in SQL Server v.next anyway.

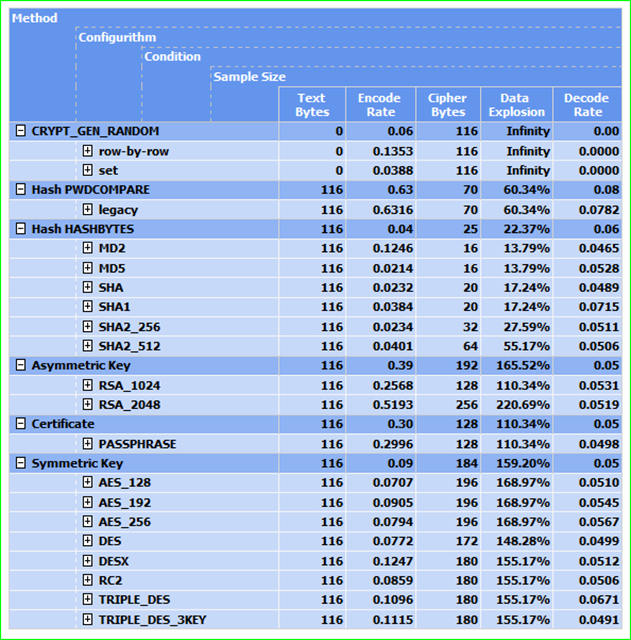

On final comment before presenting a chart of comparative test results: a “Configurithm” is a descriptive qualifier or a hashing algorithm or an asymmetric encryption algorithm or a symmetric encryption algorithm as relevant to the parent method: wunder normal form I reckon.

report 1: Relative throughput costs when hashing or encrypting with T-SQL

The complete test result is here Throughput.xlsx – click “View Raw” to open in your Excel. Honestly better to grab the test script (compare_throughput.sql) and use results from more representative hardware. The rate values will likely drop considerably, however the relative rate difference between methods and algorithms will stay about the same. Data explosion will not change at all. The VS2010 .rdl for the report is also in the project (Throughput.rdl).

June 7 2012 – After yesterday’s revelation of my and 6.4 million other user passwords hacked at the web site LinkedIn.com, Poul-Henning Kamp, the author of the MD5 algorithm has declared that algorithm no longer safe and advises replacement “without undo delay”

May 18 2016 – The report today is that the 2012 data thief/thieves actually hacked not 6.4 but 117 million Linked-In passwords. Wonder what the count will be in 2020? Or does it really matter? Seems like Linked-In has already changed their target away from human resources and toward the low ethics of becoming the low-cost interface for corporate people pimps. You may want to give a read to my post “Repel Dictionary Attacks with Checksum Truncation of the Random lambda” from 2011 to pour some salt into the wound…

Testing for this post was done using simple set based operation except for one sample generate (CRYPT_GEN_RANDOM|row-by-row) and one pass in the HASHBYTES|SHA1 test cycle. The details of the row-by-row hashing are not visible in this report view but notice that the SHA1 “Encode Rate” is skewed somewhat high in the progression. This is due mostly to the row-by-row test pass. If only the bare naked HASHBYTES operation (none = no salt) were used, the SHA1 Data Rate would be 0.0217.

method |algorithm | salt | text size|hash rate| cipher size | compare|

Not all comparisons from this table have equal validity. From the top level aggregation above -label Method – it can be seen that to generate a random NVARCHAR(58) using CRYPT_GEN_RANDOM takes longer than it does to hash those same 58 Unicode characters. While somewhat provocative from a mechanical perspective, there is no apples and apples way to compare hashing and encryption. They serve different purposes. More meaningful to compare hashing using the arcane PWDCOMPARE with HASHBYTES. Almost as meaningful to compare asymmetric and symmetric encryption methods though the length of the clear text to be encrypted will often eliminate any but the symmetric method.

In that light, comparing the behaviors of symmetric key algorithms is most interesting. As can be seen in the drilldown into the symmetric key test cycles the RowId columns was used in a test cycle for each algorithm. Yet only the AES_256 and DES algorithms were tested using no @authenticator and using a GUID instead of an integer as the @authenticator:

method |algorithm| authenticator | text size| encode | cipher size | decode |

It is not as overwhelmingly clear from this summary report that the Books Online embellished recommendation to “always use symmetric keys'” to encrypt cells is necessarily always valid. Granted, securing a value with symmetric key encryption appears to take 1/4th the times as encrypting the same value with a certificate and 1/5th the time as asymmetric key encryption. However, the data explosion advantage goes to the certificate among the 3 at only 110% of the storage requirement of the clear text value. That at least suggest a nice storage optimization (50% less explosion) for encrypted values of 256 bytes or less e.g. NVARCHAR(128) or VARBINARY(256) when the value is encrypted by a certificate rather than a symmetric key. Gee isn’t that the usage pattern for a password? Might be worth a look if you have lots-o-passwords… plus the certificate can be moved to another database or environment if necessary while secure T-SQL symmetric keys are sticky to the the database where created. It is important to bear in mind that symmetric key encryption leaves all other T-SQL encryption methods standing in the dust at 257 bytes. And also worthwhile to recognize that a portable symmetric key can be created though the burden to keep such a shared symmetric key secure is heavy if not unachievable.

There appears to be no clear performance advantage among methods when accessing the encrypted data; i.e. DECRYPTBYASYMKEY, DECRYPTBYCERT or DECRYPTBYKEY. Furthermore, newer algorithms tend to bear incrementally increasing costs over older algorithms. The cost progression appears to be in a well defined bandwidth. If nothing else this demonstrates the reality that algorithms will continue to evolve in lock-step with the processing power available for malicious use. Must be a correlation to Moore’s Law in there somewhere?

The numbers you see on the post should be used only as relative values in understanding the differences between the various cryptographic methods and available algorithms. While the aggregate rates shown do represent milliseconds per row, the metrics are taken from the (localdb) of a laptop.

The .NET 4 documentation makes the [overly simplified?] case that that symmetric algorithms are streaming while asymmetric algorithms are chunking:

Symmetric encryption and asymmetric encryption are performed using different processes. Symmetric encryption is performed on streams and is therefore useful to encrypt large amounts of data. Asymmetric encryption is performed on a small number of bytes and is therefore useful only for small amounts of data.-http://msdn.microsoft.com/en-us/library/as0w18af.aspx

In this post, the main focus is on gaining an understanding of the impact of different algorithms on encryption strategy scalability. In total, the results indicate that the vendor recommendation to always use a symmetric key to encrypt a cell is mostly acceptable, though there may be legitimate reasons to consider certificate encryption if passwords are saved rather than hashed. Especially in environments with clear division of responsibility.

Performance is not the reason to select an encryption algorithm anyway. Any more than compliance is the right standard for selecting an encryption strategy. The strategy and the algorithms used must adequately protect sensitive data. The application administrators must deal with any performance consequences and compliance requirements. Generally speaking the Secure Hashing Algorithms i.e., SHA, SHA1, SHA2-256 and SHA2_512 – all designed by the NSA – are recognized as secure. Likewise, the Advanced Encryption Standard i.e, AES_128, AES_192, and AES_256 symmetric key algorithms have been adopted by US government.

Before completing this test I had used a de facto “last algorithm added” strategy for algorithm selection. I rationalized this as a “the longer and stronger the better” rule of thumb. After this test, I think I will change my default algorithms to SHA2_256 for hashing and AES_256 for symmetric key encryption. Looks like the sweet spot to me.

I will also change the Encryption Hierarchy Administration’s passphrase manager to use the certificate directly rather than a symmetric key to encrypt secrets. Not because of the potential storage savings, but because I will gain some needed flexibility in the restore process. All I need is the Certificate and the cipher text to recall a phrase. The way I do this now, with a symmetric key I need the entire database to recall a phrase.

Coming up in the next posts I may finally begin describing some T-SQL cryptographic patterns…